Feature scaling

Feature scaling is a technique, applied during the pre-processing stage, that transforms the values of each independent variable so that they lie in a fixed range or on a common scale.

Why do we need feature scaling?

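Some machine learning models are sensitive to the magnitude of the values in each independent variable rather than to the importance of the variable itself. As a result, a feature measured on a large scale (for example, income in dollars) can dominate one measured on a small scale (for example, age in years). That is why scaling plays a crucial role in such cases, where we have to bring the data onto a common scale using one of the techniques discussed below. By applying feature scaling, we give the same priority to all the independent variables in the dataset.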
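To see the effect, here is a minimal sketch with made-up data (the names and values are hypothetical). It describes four people by age in years and income in dollars. With raw values, the income column alone decides which point is "nearest" to the query. After min-max scaling each column to [0, 1], age counts as much as income.

```python
import numpy as np

# Hypothetical toy data: each row is [age in years, annual income in dollars].
names = ["query", "A", "B", "C"]
X = np.array([
    [30, 50_000],    # query point
    [65, 51_000],    # A: very different age, almost the same income
    [32, 56_000],    # B: almost the same age, somewhat different income
    [20, 150_000],   # C: widens the range of both columns
], dtype=float)

def nearest_to_query(data):
    """Return the name of the row closest (Euclidean distance) to row 0."""
    dists = np.sqrt(((data[1:] - data[0]) ** 2).sum(axis=1))
    return names[1 + int(np.argmin(dists))]

# Min-max scale each column to [0, 1] using that column's own min and max.
X_scaled = (X - X.min(axis=0)) / (X.max(axis=0) - X.min(axis=0))

print(nearest_to_query(X))         # A: income differences swamp age differences
print(nearest_to_query(X_scaled))  # B: both features now contribute equally
```

A distance-based model such as KNN would make the same switch, because it ranks neighbours by exactly this kind of distance.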

Machine learning models that are affected by Feature Scaling -

Machine learning models or algorithms that rely on distance measurements (or, in the case of PCA, on the variance of each feature) are affected by feature scaling. Some of them are:

- KNN (K-Nearest Neighbors) [used for regression/classification]

- PCA (Principal Component Analysis) [used for dimensionality reduction]

- K-means algorithm [used for clustering; distance-based hierarchical clustering is affected in the same way]
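PCA looks for the directions of largest variance, so a feature whose numbers are simply larger ends up dominating the first principal component. The following is a minimal NumPy sketch on synthetic data. The height/weight features and their parameters are invented for illustration. It computes the first principal direction with an SVD, once before standardizing and once after.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical data: two positively correlated features on very different scales.
n = 500
height_m = rng.normal(1.7, 0.1, n)                           # metres, std ~0.1
weight_g = 35_000 * height_m + rng.normal(0, 5_000, n)       # grams, std ~6000
X = np.column_stack([height_m, weight_g])

def first_pc(data):
    """Leading principal direction of the column-centred data (via SVD)."""
    centred = data - data.mean(axis=0)
    _, _, vt = np.linalg.svd(centred, full_matrices=False)
    return np.abs(vt[0])  # the sign of a principal direction is arbitrary

# Standardize each column to mean 0 and standard deviation 1.
X_std = (X - X.mean(axis=0)) / X.std(axis=0)

print(first_pc(X))      # close to [0, 1]: weight's huge variance dominates
print(first_pc(X_std))  # about [0.707, 0.707]: both features contribute
```

Once both columns have unit variance, the first component reflects only the correlation between the features, not their units.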

Different Techniques used for Feature Scaling -

There are many techniques for feature scaling, but two of them are the most commonly used:

- Min-Max Scaler

- Standard Scaler

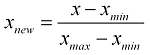

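Min-Max Scaler

- It is a scaling technique used to normalize the data, mapping each feature linearly onto a fixed range (by default [0, 1]).

- The Min-Max Scaler is strongly affected by outliers. A single extreme value becomes the minimum or maximum and squeezes all the other values into a narrow band.

- It does not assume that the data follows a Gaussian (normal) distribution, so it is a common choice when the distribution is unknown or clearly non-Gaussian.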
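The following minimal NumPy sketch shows min-max scaling on a single feature. It also shows how one outlier compresses everything else. The `min_max_scale` helper and the `feature_range` argument are illustrative. They mirror the idea behind scikit-learn's `MinMaxScaler`, but this is not its implementation. Mapping a constant column to the lower bound is an assumption of this sketch.

```python
import numpy as np

def min_max_scale(x, feature_range=(0.0, 1.0)):
    """Rescale a 1-D array linearly so that min(x) -> lo and max(x) -> hi."""
    lo, hi = feature_range
    x = np.asarray(x, dtype=float)
    x_min, x_max = x.min(), x.max()
    if x_max == x_min:               # constant column: avoid division by zero
        return np.full_like(x, lo)
    return lo + (x - x_min) * (hi - lo) / (x_max - x_min)

clean = np.array([10, 20, 30, 40, 50])
with_outlier = np.array([10, 20, 30, 40, 50, 1000])

print(min_max_scale(clean))         # -> 0, 0.25, 0.5, 0.75, 1
print(min_max_scale(with_outlier))  # first five values all squeezed below 0.05
```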

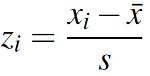

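Standard Scaler

- It is a scaling technique used to standardize the data, that is, to give each feature a mean of 0 and a standard deviation of 1.

- The Standard Scaler is also sensitive to outliers, because an extreme value shifts the mean and inflates the standard deviation. Unlike the Min-Max Scaler, however, its output is not confined to a fixed range.

- It works best when the data is roughly normally distributed. It only shifts and rescales the values, so it does not make non-normal data normal.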
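A matching minimal sketch of standardization follows. The `standardize` helper is hypothetical. It uses the population standard deviation (`ddof=0`), which is also what scikit-learn's `StandardScaler` uses. Only centring a constant column is a choice made for this sketch. On the same outlier example as before, the inliers still end up bunched together, far from the outlier.

```python
import numpy as np

def standardize(x):
    """Shift and rescale a 1-D array to mean 0 and (population) std 1."""
    x = np.asarray(x, dtype=float)
    std = x.std()                    # ddof=0: divide by n, not n - 1
    if std == 0:                     # constant column: only centre it
        return x - x.mean()
    return (x - x.mean()) / std

clean = np.array([10, 20, 30, 40, 50])
with_outlier = np.array([10, 20, 30, 40, 50, 1000])

z = standardize(clean)
print(z, z.mean(), z.std())          # about [-1.41, -0.71, 0, 0.71, 1.41], ~0, ~1
print(standardize(with_outlier))     # inliers within ~0.11 of each other, outlier > 2
```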

Difference between Standardization and Normalization -

Standardization means transforming the values so that their mean is 0 and their variance is 1. Normalization, on the other hand, means rescaling the values so that they lie between 0 and 1.
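For reference, the two transformations can be written as follows for a feature with values $x_1, \dots, x_n$. This assumes the population standard deviation (dividing by $n$), which is the convention scikit-learn's `StandardScaler` follows.

```latex
\begin{aligned}
x'_{\text{norm}} &= \frac{x - \min(x)}{\max(x) - \min(x)} \in [0, 1] \\
z &= \frac{x - \mu}{\sigma}, \qquad
\mu = \frac{1}{n}\sum_{i=1}^{n} x_i, \qquad
\sigma = \sqrt{\frac{1}{n}\sum_{i=1}^{n} (x_i - \mu)^2}
\end{aligned}
```

As a worked example, take $x = (10, 20, 30, 40, 50)$. Normalization gives $(0, 0.25, 0.5, 0.75, 1)$. For standardization, $\mu = 30$ and $\sigma = \sqrt{200} \approx 14.14$, so $z \approx (-1.41, -0.71, 0, 0.71, 1.41)$.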

There are some other scaling techniques as well, such as:

- Max Abs Scaler

- Robust Scaler

- Quantile Transformer Scaler

- Power Transformer Scaler

- Unit Vector Scaler
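Three of these are simple enough to sketch in a few lines of NumPy. Max Abs divides by the largest absolute value. Robust centres on the median and divides by the interquartile range. Unit Vector rescales each sample (row) to length 1. The helpers below are hypothetical illustrations of those ideas, not scikit-learn's `MaxAbsScaler`, `RobustScaler` or `Normalizer`. The Quantile and Power transformers are non-linear and are better left to a library such as scikit-learn's `QuantileTransformer` and `PowerTransformer`.

```python
import numpy as np

def max_abs_scale(x):
    """Divide by the largest absolute value, so results lie in [-1, 1].
    Assumes x is not all zeros."""
    x = np.asarray(x, dtype=float)
    return x / np.abs(x).max()

def robust_scale(x):
    """Centre on the median and divide by the interquartile range (IQR).
    Assumes the IQR is non-zero."""
    x = np.asarray(x, dtype=float)
    q1, median, q3 = np.percentile(x, [25, 50, 75])
    return (x - median) / (q3 - q1)

def unit_vector_scale(X):
    """Scale each row (sample) to Euclidean length 1. Assumes no all-zero rows."""
    X = np.asarray(X, dtype=float)
    return X / np.linalg.norm(X, axis=1, keepdims=True)

with_outlier = np.array([10, 20, 30, 40, 50, 60, 70, 80, 1000])
print(max_abs_scale(with_outlier))          # outlier -> 1.0, inliers <= 0.08
print(robust_scale(with_outlier))           # inliers span [-1, 0.75], outlier 23.75
print(unit_vector_scale([[3, 4], [1, 0]]))  # rows [0.6, 0.8] and [1, 0]
```

Because the median and IQR ignore the extreme value, the robust version keeps a usable spread for the inliers where min-max scaling would crush them.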

Python practice

- First, import the required libraries and check the correlation matrix of the data frame.
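The following is a minimal sketch of this step followed by the two main scalers. It assumes pandas and scikit-learn are installed. It uses a made-up data frame, so the column names and values are hypothetical and are not the post's dataset. Both scalers apply a linear map per column, so the correlation matrix computed before scaling stays the same afterwards.

```python
import numpy as np
import pandas as pd
from sklearn.preprocessing import MinMaxScaler, StandardScaler

# Hypothetical data frame: three numeric features on very different scales.
rng = np.random.default_rng(42)
n = 200
age = rng.integers(18, 70, n)
income = 1_000 * age + rng.normal(0, 10_000, n)
score = rng.uniform(0, 1, n)
df = pd.DataFrame({"age": age, "income": income, "score": score})

# Step 1: inspect the correlation matrix of the raw features.
print(df.corr().round(2))

# Step 2: scale every column. fit_transform returns a NumPy array,
# so wrap it back into a DataFrame to keep the column names.
df_minmax = pd.DataFrame(MinMaxScaler().fit_transform(df), columns=df.columns)
df_std = pd.DataFrame(StandardScaler().fit_transform(df), columns=df.columns)

print(df_minmax.describe().loc[["min", "max"]].round(2))  # every column in [0, 1]
print(df_std.describe().loc[["mean", "std"]].round(2))    # means ~0, std ~1

# Per-column linear scaling leaves the correlations unchanged.
print(np.allclose(df.corr(), df_minmax.corr()), np.allclose(df.corr(), df_std.corr()))
```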

Conclusion

From this post, we have learned the concepts behind feature scaling. It is a necessary preprocessing step for a number of machine learning models and algorithms, especially those that rely on distances.
