Logistic Regression

In the previous post, we discussed what regression is and covered Linear Regression in depth.

In this post, we look at Logistic Regression, a classification technique used in machine learning.

What is Logistic Regression?

Logistic Regression is another supervised, statistical, predictive model. It is used mostly for classification into 2 classes (binary classification), but it can also be used for multinomial classification, i.e. more than 2 classes, using a method such as 'OVR' (One vs Rest) or 'multinomial'. A third option, 'auto', chooses between these two automatically.

Note: by default, the method is selected depending on the solver.

Logistic Regression - A Regression Problem

This is a very important concept. Logistic Regression is built on top of the same linear model that is used in Linear Regression.

At the end, a conversion method (discussed later) makes sure that the result, i.e. the probability for each class, lies between 0 and 1 (0 < P(A) < 1).

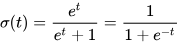

In the two pictures above, only a regression line is used to classify samples as male or female. The problem is that the value predicted by a regression line can lie anywhere from -∞ to +∞. To solve this, a sigmoid function is used to make the value lie between 0 and 1.

$$\sigma(z) = \frac{1}{1 + e^{-z}}$$

This function is known as the sigmoid function. Here z is the value predicted by the regression line.
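As a rough illustration, the sigmoid function can be sketched in plain Python. The function name `sigmoid` and the sample inputs are chosen here for illustration only. The two branches are algebraically the same formula and are only there to avoid overflow for very large positive or negative inputs.

```python
import math

def sigmoid(z: float) -> float:
    """Squash any real number z into the range 0 to 1."""
    # Both branches compute 1 / (1 + e^(-z)); splitting on the sign of z
    # keeps math.exp from overflowing when |z| is very large.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)

# Illustrative inputs: large negative values approach 0,
# large positive values approach 1, and z = 0 gives exactly 0.5.
for z in (-10.0, -1.0, 0.0, 1.0, 10.0):
    print(f"z = {z:6.1f} -> sigmoid(z) = {sigmoid(z):.5f}")
```

Whatever value the regression line produces, the output can be read as a probability.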

Other Functions

There are many other functions as well, but the sigmoid function is used here because its output matches the definition of a probability (a value between 0 and 1). Other such functions include:

- Linear

- Tanh

- ReLU

- LeakyReLU

- ELU

- Softmax

Note: These types of activation functions are mainly used in Deep Neural Networks (DNN).
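For reference, the functions listed above can be sketched in a few lines of plain Python. These are the commonly used textbook definitions. The `alpha` defaults (0.01 for LeakyReLU, 1.0 for ELU) are assumptions picked for illustration, not values taken from this post.

```python
import math

def linear(z):
    # Identity: the output is the input, unbounded in both directions.
    return z

def tanh(z):
    # Like sigmoid, but the output lies between -1 and 1.
    return math.tanh(z)

def relu(z):
    # Zero for negative inputs, identity for positive inputs.
    return max(0.0, z)

def leaky_relu(z, alpha=0.01):
    # Like ReLU, but negative inputs keep a small slope alpha (assumed default).
    return z if z > 0 else alpha * z

def elu(z, alpha=1.0):
    # Smooth for negative inputs, approaching -alpha (assumed default).
    return z if z > 0 else alpha * (math.exp(z) - 1.0)

def softmax(zs):
    # Turns a list of scores into probabilities that sum to 1.
    # Subtracting the maximum first avoids overflow in math.exp.
    m = max(zs)
    exps = [math.exp(z - m) for z in zs]
    total = sum(exps)
    return [e / total for e in exps]

print(relu(-2.0), leaky_relu(-2.0), elu(-2.0), tanh(0.5))
print(softmax([1.0, 2.0, 3.0]))
```

Of these, only softmax produces a full probability distribution over several classes.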


Basics of Logistic Regression

1. Logistic Regression also uses a linear model, in which the dependent variable (Y) is expressed in terms of the independent variables (x1, x2, x3).

Y = b0 + b1*x1+b2*x2+b3*x3

Here, we have taken 3 independent variables only.

2. Now we take the probability of Y using the sigmoid function.

P(Y) = 1/(1+e^(-Y))

This P(Y) will lie between 0 and 1.

3. The model selects the class that has the highest probability among all the classes.

4. Internally, the model minimizes a cost function called the Log Loss function, just as Linear Regression minimizes its own cost function. The weights (or coefficients) are calculated by an iterative optimizer such as gradient descent, which adjusts them step by step to reduce this loss.

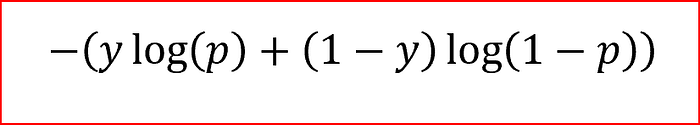

The Log Loss function is also known as the Cross Entropy Loss function. Here Yi is the actual class label of sample i (its true probability, 0 or 1) and the second term is the predicted probability P(Y).
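Steps 1 to 3 above can be sketched as follows for the two-class case. This is a minimal illustration, not a library implementation. The function names, the coefficient values `b0` and `b`, the sample `x`, and the 0.5 threshold are all made up for the example.

```python
import math

def sigmoid(z):
    # Numerically safe form of 1 / (1 + e^(-z)).
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)

def predict_proba(x, b0, b):
    # Step 1: linear model Y = b0 + b1*x1 + b2*x2 + b3*x3
    y = b0 + sum(bi * xi for bi, xi in zip(b, x))
    # Step 2: convert Y into a probability with the sigmoid function
    return sigmoid(y)

def predict_class(x, b0, b, threshold=0.5):
    # Step 3 (two classes): class 1 if P(Y) reaches the threshold, else class 0.
    # The 0.5 threshold is an assumed, commonly used default.
    return 1 if predict_proba(x, b0, b) >= threshold else 0

# Hypothetical coefficients and one hypothetical sample with 3 features.
b0 = -1.0
b = [0.8, -0.5, 0.3]
x = [2.0, 1.0, 0.5]

p = predict_proba(x, b0, b)
print(f"P(Y) = {p:.4f}, predicted class = {predict_class(x, b0, b)}")
```

With two classes, picking the class with the highest probability is the same as checking whether P(Y) is at least 0.5.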

Note: For binomial classification, the previous result becomes:

$$-\big(y \log(p) + (1 - y) \log(1 - p)\big)$$

where y is the actual output (i.e. 1 or 0) and p is the probability predicted by the logistic regression.
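To make step 4 concrete, here is a small sketch of the binary log loss, -(y*log(p) + (1-y)*log(1-p)) averaged over all samples, together with a plain gradient descent loop that fits the coefficients. The toy data, the learning rate, and the number of epochs are assumptions chosen for illustration, and real libraries use more sophisticated solvers.

```python
import math

def sigmoid(z):
    # Numerically safe form of 1 / (1 + e^(-z)).
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)

def log_loss(ys, ps, eps=1e-15):
    # Average of -(y*log(p) + (1-y)*log(1-p)) over all samples.
    total = 0.0
    for y, p in zip(ys, ps):
        p = min(max(p, eps), 1.0 - eps)  # clip so log(0) never occurs
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(ys)

def fit(xs, ys, lr=0.1, epochs=1000):
    # Batch gradient descent on the log loss; lr and epochs are assumed values.
    n, n_features = len(xs), len(xs[0])
    b0, b = 0.0, [0.0] * n_features
    for _ in range(epochs):
        grad0, grad = 0.0, [0.0] * n_features
        for x, y in zip(xs, ys):
            p = sigmoid(b0 + sum(bj * xj for bj, xj in zip(b, x)))
            err = p - y  # gradient of the log loss w.r.t. the linear output
            grad0 += err
            for j, xj in enumerate(x):
                grad[j] += err * xj
        b0 -= lr * grad0 / n
        b = [bj - lr * gj / n for bj, gj in zip(b, grad)]
    return b0, b

# Made-up toy data: one feature, class 0 for small values, class 1 for large.
xs = [[0.5], [1.0], [1.5], [3.0], [3.5], [4.0]]
ys = [0, 0, 0, 1, 1, 1]

initial_loss = log_loss(ys, [0.5] * len(ys))  # all weights zero -> p = 0.5
b0, b = fit(xs, ys)
ps = [sigmoid(b0 + b[0] * x[0]) for x in xs]
print(f"loss before: {initial_loss:.4f}, loss after: {log_loss(ys, ps):.4f}")
```

The loss after training should be lower than the starting loss, which is what "calculating the weights" means in practice.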

Note: For multinomial classification with the one-vs-rest method, the logistic regression model generates N sigmoid curves, one per class, where N is the number of classes present in the data set.

Example: for the MNIST dataset (digit classification), the model generates 10 sigmoid curves to classify the 10 digits.

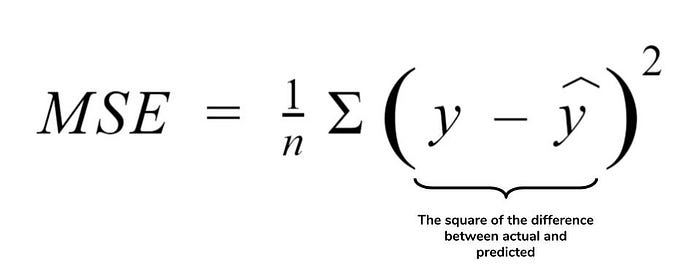
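The one-vs-rest idea can be sketched as follows: each class gets its own set of coefficients and its own sigmoid score, and the class with the highest score wins. The three coefficient sets and the sample below are hypothetical values invented for the example, with 3 classes instead of 10 to keep it short.

```python
import math

def sigmoid(z):
    # Numerically safe form of 1 / (1 + e^(-z)).
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)

def predict_ovr(x, models):
    """models holds one (b0, b) pair per class, each a 'this class vs the rest' model."""
    scores = [
        sigmoid(b0 + sum(bj * xj for bj, xj in zip(b, x)))
        for b0, b in models
    ]
    # The predicted class is the index of the highest score.
    best = max(range(len(scores)), key=lambda k: scores[k])
    return best, scores

# Hypothetical coefficients for N = 3 classes and 2 features.
models = [
    (0.5, [-1.0, 0.2]),   # class 0 vs rest
    (-0.2, [0.3, 0.9]),   # class 1 vs rest
    (-1.0, [1.2, -0.4]),  # class 2 vs rest
]
x = [2.0, 1.0]

label, scores = predict_ovr(x, models)
print("scores per class:", [round(s, 3) for s in scores])
print("predicted class:", label)
```

Note that one-vs-rest scores come from independent curves, so they do not have to sum to 1.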

MSE Estimation Cannot Be Applied to the Logistic Regression Problem

- MSE (mean squared error) is an error estimation technique mainly used in Linear Regression.

- In Logistic Regression, MSE is not a good fit. The actual Y is 0 or 1 and the predicted Y lies between 0 and 1, so the error is always smaller than 1, and squaring it makes it smaller still. Even a confidently wrong prediction is penalized by at most 1, which gives the optimizer a very weak signal. In addition, MSE combined with the sigmoid function gives a non-convex cost function, so gradient descent can get stuck in a local minimum, whereas Log Loss is convex for this model.
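A small numeric comparison may help here. For a sample whose actual class is 1, the sketch below prints the squared error and the log loss for several predicted probabilities. The probabilities are illustrative values only. The squared error can never exceed 1, while the log loss keeps growing as the prediction becomes more confidently wrong.

```python
import math

def squared_error(y, p):
    # Per-sample squared error, the term that MSE averages.
    return (y - p) ** 2

def log_loss_single(y, p):
    # Per-sample log loss: -(y*log(p) + (1-y)*log(1-p)).
    return -(y * math.log(p) + (1 - y) * math.log(1.0 - p))

y = 1  # actual class
for p in (0.9, 0.5, 0.1, 0.01, 0.0001):
    print(
        f"p = {p:<7} squared error = {squared_error(y, p):.4f}   "
        f"log loss = {log_loss_single(y, p):.4f}"
    )
```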

Decision Boundary

A decision boundary is the region of a problem space in which the output label of a classifier is ambiguous. If the decision surface is a hyperplane, then the classification problem is linear, and the classes are linearly separable.
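For a two-class logistic regression with a 0.5 threshold, the ambiguous region is where the predicted probability is exactly 0.5, which happens when the linear part b0 + b1*x1 + b2*x2 equals 0. That is a straight line in two dimensions. The sketch below checks this with hypothetical coefficients. The values of `b0`, `b1`, `b2` and the helper name `boundary_x2` are assumptions for illustration.

```python
import math

def sigmoid(z):
    # Numerically safe form of 1 / (1 + e^(-z)).
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)

# Hypothetical coefficients for a model with two features.
b0, b1, b2 = -3.0, 1.0, 2.0

def boundary_x2(x1):
    # Solve b0 + b1*x1 + b2*x2 = 0 for x2 to get a point on the boundary.
    return -(b0 + b1 * x1) / b2

for x1 in (0.0, 1.0, 2.0):
    x2 = boundary_x2(x1)
    p = sigmoid(b0 + b1 * x1 + b2 * x2)
    print(f"x1 = {x1}, x2 = {x2} -> P = {p}")

# Points on either side of the line fall clearly into one class.
print("above the line:", sigmoid(b0 + b1 * 1.0 + b2 * 2.0))
print("below the line:", sigmoid(b0 + b1 * 1.0 + b2 * 0.0))
```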

Conclusion

In this post, we learnt about Logistic Regression, a supervised predictive model, and some notable concepts related to it.
