Linear Regression

What is Regression?

Regression is a measure of the relationship between a continuous dependent variable (e.g. the output) and one or more discrete or continuous independent variables (e.g. the inputs).

In machine learning, regression is a supervised, statistical, predictive model.

Other kinds of statistical models include descriptive models and prescriptive models.

Different Types of Regression Models in Machine Learning

In machine learning, there are mainly three types of regression model.

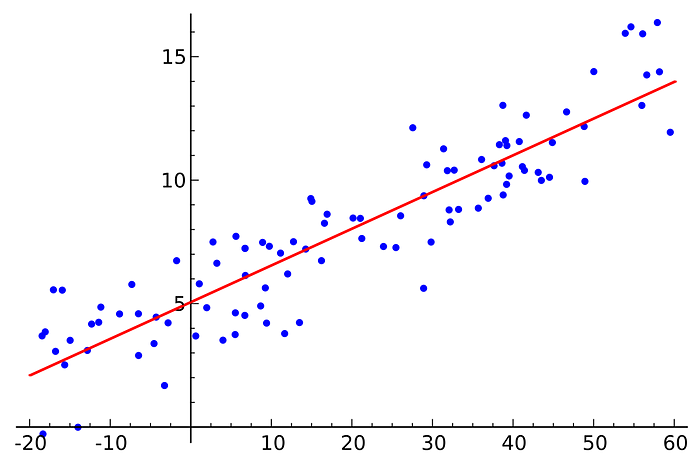

- Linear Regression Model

In this type of predictive model, the relationship between the independent and dependent variables can be represented as a straight line (a single line, or multiple lines, particularly where clusters are present).

- Polynomial Regression Model

- Logistic Regression Model

In this post we look at the simplest regression model: linear regression.


Basics of Linear Model in Machine Learning:

Using a linear model, we express the dependent variable (Y) in terms of the independent variables (x1, x2, x3):

Y = b0 + b1*x1 + b2*x2 + b3*x3

Here we have used only three independent variables, for illustration.
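To make the equation concrete, here is a minimal sketch in Python that evaluates it for one observation. The function name `predict` and all the numbers are hypothetical, chosen only for illustration.

```python
def predict(x, b0, b):
    """Return b0 + b1*x1 + b2*x2 + ... for a single observation x."""
    return b0 + sum(bj * xj for bj, xj in zip(b, x))

# Hypothetical coefficients and inputs (not taken from any real data).
b0 = 1.0
b = [2.0, 0.5, -1.0]   # b1, b2, b3
x = [3.0, 4.0, 2.0]    # x1, x2, x3

y_hat = predict(x, b0, b)
print(y_hat)  # 1 + 2*3 + 0.5*4 - 1*2 = 7.0
```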

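We choose the best-fit straight line by minimizing the mean squared error (MSE), i.e. the mean of the squared differences between the observed and predicted values.

Because the MSE is just the sum of squared errors divided by the (fixed) number of observations, minimizing the sum of squared errors also minimizes the MSE. This function is called the cost function.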
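As a small sketch of the two quantities just described, the following Python computes the sum of squared errors and the MSE. The helper names `sse` and `mse` and the sample values are assumptions made for this example.

```python
def sse(y_true, y_pred):
    """Sum of squared errors between observed and predicted values."""
    return sum((yt - yp) ** 2 for yt, yp in zip(y_true, y_pred))

def mse(y_true, y_pred):
    """Mean squared error: the SSE divided by the number of observations."""
    return sse(y_true, y_pred) / len(y_true)

# Hypothetical observed and predicted values.
y_true = [3.0, 5.0, 7.0]
y_pred = [2.0, 5.0, 9.0]

# Errors are 1, 0, -2, so the squares are 1, 0, 4.
print(sse(y_true, y_pred))  # 5.0
print(mse(y_true, y_pred))  # 5/3, about 1.667
```

Since the two differ only by the constant factor 1/n, the coefficients that minimize one also minimize the other.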

From the cost function we can obtain the coefficient (or weight) of each independent variable by setting the first derivative of the cost function with respect to each coefficient equal to zero.
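Written out for the three-variable model, this step looks roughly as follows. The symbol J for the cost function, the observation index i, and the sample size n are notation introduced here for illustration; the sum of squared errors is used as the cost.

```latex
J(b_0, b_1, b_2, b_3) = \sum_{i=1}^{n} \left( y_i - b_0 - b_1 x_{i1} - b_2 x_{i2} - b_3 x_{i3} \right)^2

\frac{\partial J}{\partial b_0} = -2 \sum_{i=1}^{n} \left( y_i - b_0 - b_1 x_{i1} - b_2 x_{i2} - b_3 x_{i3} \right) = 0

\frac{\partial J}{\partial b_j} = -2 \sum_{i=1}^{n} x_{ij} \left( y_i - b_0 - b_1 x_{i1} - b_2 x_{i2} - b_3 x_{i3} \right) = 0, \quad j = 1, 2, 3
```

This gives four linear equations in the four unknowns b0, b1, b2, b3, which can be solved simultaneously.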

NOTE:

If we have bivariate data, the above procedure gives a particularly neat result.

Y = b0 + b1*x [ For Bivariate Data ]

Solving for the coefficients then gives:

b1 = cov(x,y)/var(x)

b0 = E(y) - b1*E(x)

where E(x) and E(y) are the means of x and y respectively.
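The bivariate formulas can be sketched directly in Python. The function name `fit_line` and the data are hypothetical; the points are chosen to lie exactly on y = 1 + 2x so the result is easy to check by hand.

```python
def fit_line(xs, ys):
    """Return (b0, b1) using b1 = cov(x, y) / var(x) and b0 = E(y) - b1 * E(x)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Population covariance and variance; the 1/n factors cancel in the ratio.
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / n
    var_x = sum((x - mean_x) ** 2 for x in xs) / n
    b1 = cov_xy / var_x
    b0 = mean_y - b1 * mean_x
    return b0, b1

# Hypothetical data lying exactly on the line y = 1 + 2x.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 5.0, 7.0, 9.0]

b0, b1 = fit_line(xs, ys)
print(b0, b1)  # intercept close to 1, slope close to 2
```

Note that this sketch assumes the x values are not all identical; otherwise var(x) is zero and the slope is undefined.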

Reasons for using MSE (mean squared error) over MAE (mean absolute error)

- The MSE cost function is quadratic in the coefficients, so it is continuous, differentiable, and convex. Setting its derivative to zero therefore gives a global minimum, which corresponds to the best-fit line.

- On the other hand, the |x| function is continuous but not differentiable at x = 0, so the derivative-based steps above cannot be applied directly.

Disadvantage of using MSE (mean squared error)

- If an error is very large (for example, because of an outlier), its square becomes a much larger number and dominates the cost. In such cases a different error function, such as MAE, may be a better choice.
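A quick sketch of this effect, comparing how MSE and MAE react when one large error is added. The helper names and the error values are hypothetical and only meant to illustrate the point.

```python
def mse(errors):
    """Mean of the squared errors."""
    return sum(e ** 2 for e in errors) / len(errors)

def mae(errors):
    """Mean of the absolute errors."""
    return sum(abs(e) for e in errors) / len(errors)

# Hypothetical residuals, then the same residuals plus one large outlier.
errors = [1.0, -1.0, 2.0, -2.0]
errors_with_outlier = errors + [20.0]

print(mse(errors), mae(errors))  # 2.5 1.5
print(mse(errors_with_outlier))  # 82.0
print(mae(errors_with_outlier))  # about 5.2
```

In this example the single outlier multiplies the MSE by roughly 33, while the MAE grows by a factor of about 3.5.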

Conclusion:

In this post we have learnt what a regression problem is and what linear regression is. For the next concepts, please go through the other Data Science posts.
